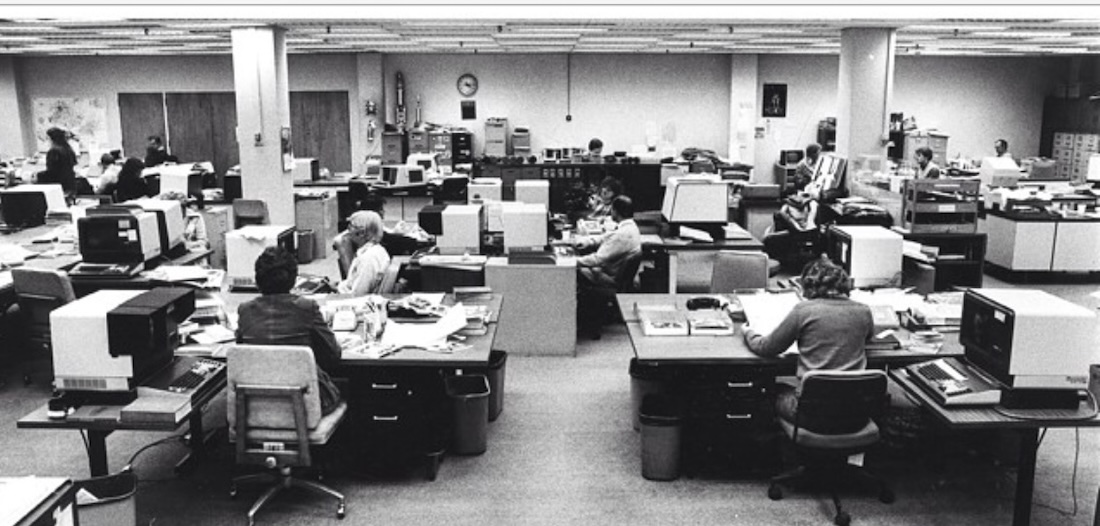

When I joined The Daily Oklahoman sports desk in the fall of 1983, it was a black and white world. Published photographs were all black and white. The newspaper pages themselves were black and white (and read all over!).

It’s hard to overstate the impact the newspaper had on Oklahoma City and beyond. The paper’s circulation was north of 200,000 and we circulated in every county in the state. When someone wanted a sports score or a football recruiting update, or to complain about a headline, they called the paper.

I know because I was on the receiving end of those calls a lot.

We drew our pages by hand on sheets of paper, which were sent down to the composing room to be put together by a large crew of folks. The scene in composing was often frenetic as people rushed around on deadline, shouting ‘we need a cut!’ for an editor to help them trim a story that was too long as it was pasted on the page.

Some nights I acted as a copy editor on the sports desk, working to smooth the rough edges off of copy, as well as writing headlines. Other nights, I actually drew the pages, although I had such a lack of artistic vision that my pages usually looked as if they were part of a third-grade art project.

Anyway, desktop publishing was introduced in the newsroom in 1987. Our jobs changed dramatically, as we ‘drew’ our pages on a computer screen and they came out of the printers as one complete sheet instead of having to be pasted down by hand. There were far fewer people needed in the composing room.

Desktop publishing required more people to work on the sports desk to lay out individual pages. We were a little community that endured the stressful race to make three deadlines a night, often remaking much of the sports section for each deadline.

As desktop publishing became more sophisticated, one of my co-workers on the sports desk saw what was coming. He predicted that technology would advance so far that copy editors would all be replaced someday by ‘automatic headline writers.’

Doug Simpson, you saw where things were headed 30 years before it became reality. In fact, a recent Google search revealed dozens of sites offering AI headline writing, including the popular “Grammarly” site that offers a ‘free headline generator.’

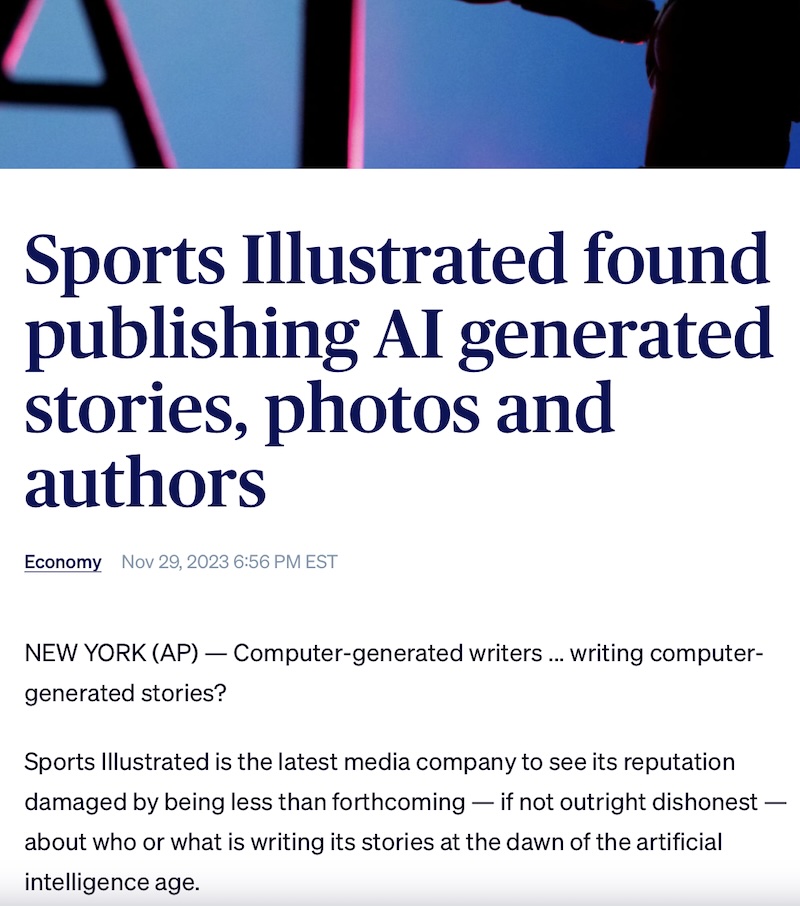

There has been ongoing controversy over news sites that use AI to write their actual copy, including a disturbing story from 2023 about some Sports Illustrated copy generated by AI. Sports Illustrated was once considered the gold standard of sports writing.

Today, we’re seeing predictions every day about how AI will replace millions of jobs worldwide. We’ve seen some of it become reality locally when Paycom cut 500 jobs and attributed it to artificial intelligence.

So where will it all end? Will artificial intelligence really displace millions of white-collar jobs, including the software coding jobs that created AI in the first place?

Call me a skeptic, but I can’t see AI replacing airline pilots, health care professionals or school teachers, among many other professions.

But then, I think back to what my newspaper colleague Doug predicted more than three decades ago. There ARE automatic headline writers in 2026.

So, I’ll close this with a quote from one of my favorite movies, Caddyshack, which reflects the attitude of our AI overlords. It’s a scene where caddy Danny Noonan confesses to Judge Smails during a round of golf that he might not be able to go to college because his parents don’t have enough money.

“Well, the world needs ditch diggers, too,” the judge tells Danny as he walks away.

Ouch.